Introduction

AWS ECS is a widely adopted service across industries. To illustrate the scale and ubiquity of this service: over 2.4 billion Amazon Elastic Container Service tasks are launched every week (source), and over 65% of all new AWS container customers use Amazon ECS (source).

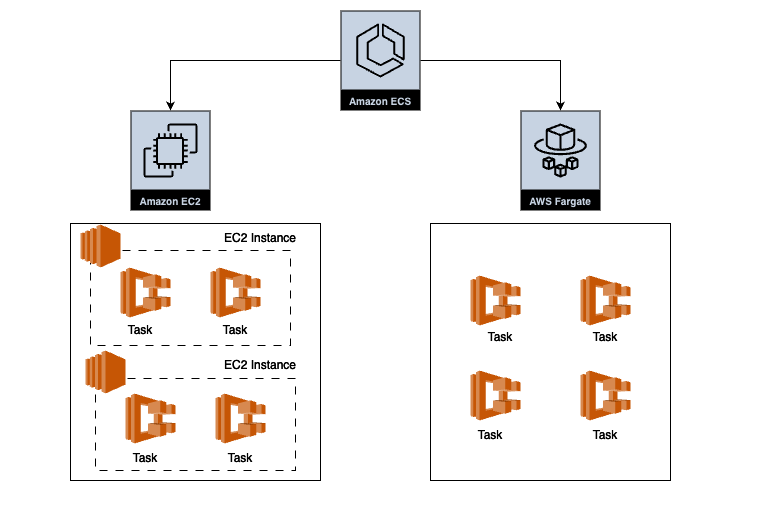

There are two primary launch types for ECS: Fargate and EC2. The choice between them depends on factors like cost, performance, operational overhead, and the variability of your workload.

Architecture diagram showing EC2 and Fargate launch types for ECS

Each launch type also comes with its own security considerations. While Fargate provides ECS tasks with strong isolation boundaries, task-level isolation is much weaker when ECS workloads run on EC2. To mitigate these concerns, AWS provides guidance on best practices for hardening ECS on EC2 workloads.

“Unlike Fargate, ECS does not offer task isolation on customer-owned instances. Each task running on Fargate has its own isolation boundary and does not share the underlying kernel, CPU resources, memory resources, or elastic network interface with another task. For EC2 and External Container Instances on ECS, there is no task isolation. Since containers are not a security boundary, they can potentially access any data on the instance, including data associated with ECS agent and data associated with other containers and tasks on the container instance, including credentials and other sensitive data. Therefore, the customer will have to manage the ECS agent and worker node as part of their configuration and management operations and account for that in their security models.”

One critical hardening consideration is the restriction of container access to EC2 Instance Metadata Service (IMDS). Our research into configuration hardening identified that the nuances of restricting IMDS access for a secure ECS on EC2 architecture warrant a more detailed examination than is currently available in the official guidance. In this post, we will cover these nuances and address the gaps to provide a more comprehensive picture of how to securely configure ECS workloads on EC2.

The Problem: Why Harden IMDS Access?

As previously mentioned, ECS tasks running on EC2 instances have weak task-level isolation, which can lead to unauthorized access to the ECS agent, cross-task credentials, and data, among other problems. We have seen a number of attack vectors targeting ECS tasks that allow for credential theft and privilege escalation: for example, using ECS task definitions for privilege escalation, or accessing ECS task credentials by overriding the task definition.

A recently disclosed attack vector, ECScape, demonstrates the risk of privilege escalation when ECS tasks share the same underlying EC2 host. In this attack, a malicious container with a low-privileged IAM task role could steal the credentials of a container with a higher-privileged task role by impersonating the ECS agent. The impersonation leverages an undocumented WebSocket protocol that the agent uses to communicate with the ECS control plane. One of the control plane's jobs is to push the credentials of running tasks to the ECS agent. Once impersonation succeeds, the control plane believes it is communicating with a legitimate ECS agent and delivers the credentials for all tasks on that host to the malicious container. To successfully pose as the ECS agent, the attacker first retrieves the EC2 instance profile credentials by querying the EC2 Instance Metadata Service (IMDS) endpoint (http://169.254.169.254/latest/meta-data/iam/security-credentials/{RoleName}) from within the compromised container.

Because the onus of hardening the configuration falls on the AWS customer, one major responsibility is blocking access to EC2 IMDS. In the default configuration, ECS tasks can query EC2 metadata to fetch the credentials of the container instance role (the EC2 instance profile role), and can leverage those to escalate privileges and gain cross-task role access (as the ECScape attack vector highlights). In fact, AWS updated its documentation to specifically highlight this responsibility in light of ECScape:

“For EC2 and External Container Instances on ECS, there is no task isolation (unlike with Fargate) and containers can potentially access credentials for other tasks on the same container instance. They can also access permissions assigned to the ECS container instance role. Follow the recommendations in Roles recommendations to block access to the Amazon EC2 Instance Metadata Service for containers”

Before we delve into blocking IMDS access for ECS tasks, it is important to review the available networking modes.

Refresher: Networking Modes for ECS Tasks on EC2

When running ECS tasks on EC2 instances, several networking modes are available for the task. Here is a summary of the modes (for Linux containers):

- awsvpc: The task is allocated its own elastic network interface (ENI). This is also the recommended mode, as it allows greater control over network traffic restrictions (see the section Note on AWSVPC Mode).
- bridge: Tasks use Docker's built-in virtual network. This is the default network mode.
- host: Tasks map container ports directly to the EC2 instance's ENI.
- none: Tasks have no network connectivity. There is little to consider with this mode, since no network connectivity means no IMDS access and no IAM task role access.
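Because the right mitigation depends on the network mode, it helps to confirm which mode a task definition actually uses before choosing one. The following is a minimal sketch, assuming the AWS CLI is installed and configured. The function name task_network_mode and the AWS_CLI override variable are hypothetical helpers introduced here for illustration; AWS_CLI only exists so a stub can be swapped in for dry runs. The sketch treats an empty or None result as bridge, on the assumption that an unset networkMode means the Linux default described above.

```shell
# Print the network mode of an ECS task definition.
# Hypothetical helper; AWS_CLI is an optional override (defaults to the aws CLI).
task_network_mode() {
  local task_def="$1"   # family, family:revision, or full ARN
  local mode
  mode=$("${AWS_CLI:-aws}" ecs describe-task-definition \
    --task-definition "$task_def" \
    --query 'taskDefinition.networkMode' \
    --output text) || return 1
  # Assumption: an unset networkMode means the Linux default, bridge.
  case "$mode" in
    ""|None) echo bridge ;;
    *) echo "$mode" ;;
  esac
}
```

For example, task_network_mode ecs-on-ec2-imds-research-awsvpc-task-family:1 should print awsvpc for the task definition used in the test below.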

Blocking IMDS Access

It is quite clear now that ECS tasks on EC2 must be blocked from accessing EC2’s IMDS. So how should this be done in practice?

How about IMDSv2?

In the past few years, there has been a growing push to move toward IMDSv2 (a session-oriented method of fetching metadata). Newly released EC2 instance types support only IMDSv2, and it has also been made the default choice for AWS Management Console Quick Starts and other launch pathways. With IMDSv2, it is common to set the hop limit to 1 (the hop limit being the number of network hops that the PUT response for the /latest/api/token request is allowed to make).
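For reference, requiring IMDSv2 with a hop limit of 1 on an existing instance can be done with the AWS CLI's ec2 modify-instance-metadata-options command, and the result read back with ec2 describe-instances. This is a minimal sketch, assuming a configured AWS CLI with permission to modify the instance; the function names and the AWS_CLI override hook are hypothetical.

```shell
# Require IMDSv2 session tokens and limit the token PUT response to one hop.
# Hypothetical helper; AWS_CLI is an optional override (defaults to the aws CLI).
require_imdsv2_hop_limit_1() {
  local instance_id="$1"
  "${AWS_CLI:-aws}" ec2 modify-instance-metadata-options \
    --instance-id "$instance_id" \
    --http-endpoint enabled \
    --http-tokens required \
    --http-put-response-hop-limit 1
}

# Print the instance's current metadata options (HttpTokens, HttpPutResponseHopLimit, ...).
show_metadata_options() {
  local instance_id="$1"
  "${AWS_CLI:-aws}" ec2 describe-instances \
    --instance-ids "$instance_id" \
    --query 'Reservations[0].Instances[0].MetadataOptions'
}
```

Running require_imdsv2_hop_limit_1 with a placeholder ID such as i-0123456789abcdef0, then show_metadata_options with the same ID, should show HttpTokens as required and HttpPutResponseHopLimit as 1.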

With IMDSv2 required and the hop limit set to 1, one might reasonably assume that ECS tasks cannot reach the EC2 IMDS, since metadata responses can travel only one hop (that is, to the EC2 instance itself). In fact, AWS explicitly calls out that a hop limit of 1 might be problematic for container environments:

“The AWS SDKs use IMDSv2 calls by default. If the IMDSv2 call receives no response, some AWS SDKs retry the call and, if still unsuccessful, use IMDSv1. This can result in a delay, especially in a container environment. For those AWS SDKs that require IMDSv2, if the hop limit is 1 in a container environment, the call might not receive a response at all because going to the container is considered an additional network hop."

However, upon testing, Latacora found that requiring IMDSv2 with a hop limit of 1 isn't quite a silver bullet for blocking IMDS access from ECS tasks. The table below shows whether ECS tasks in each networking mode could access EC2 metadata via the IMDS endpoint (169.254.169.254) when IMDSv2 is required and the hop limit is set to 1 on the EC2 instance:

| ECS Task Networking Mode | Can Access EC2 Metadata via IMDS Endpoint |
|---|---|
| awsvpc | Yes |
| bridge | No |
| host | Yes |

Requiring IMDSv2 with a hop limit of 1 blocks IMDS access only in bridge networking mode. This is because in bridge mode, the task's network interface sits a second hop away from the IMDS endpoint (traffic is routed through the host's Docker bridge). The other modes, awsvpc and host, can still access EC2 metadata, as their network interfaces are only one hop from the instance metadata endpoint. Here are some screenshots of the IMDSv2 test for awsvpc networking mode:

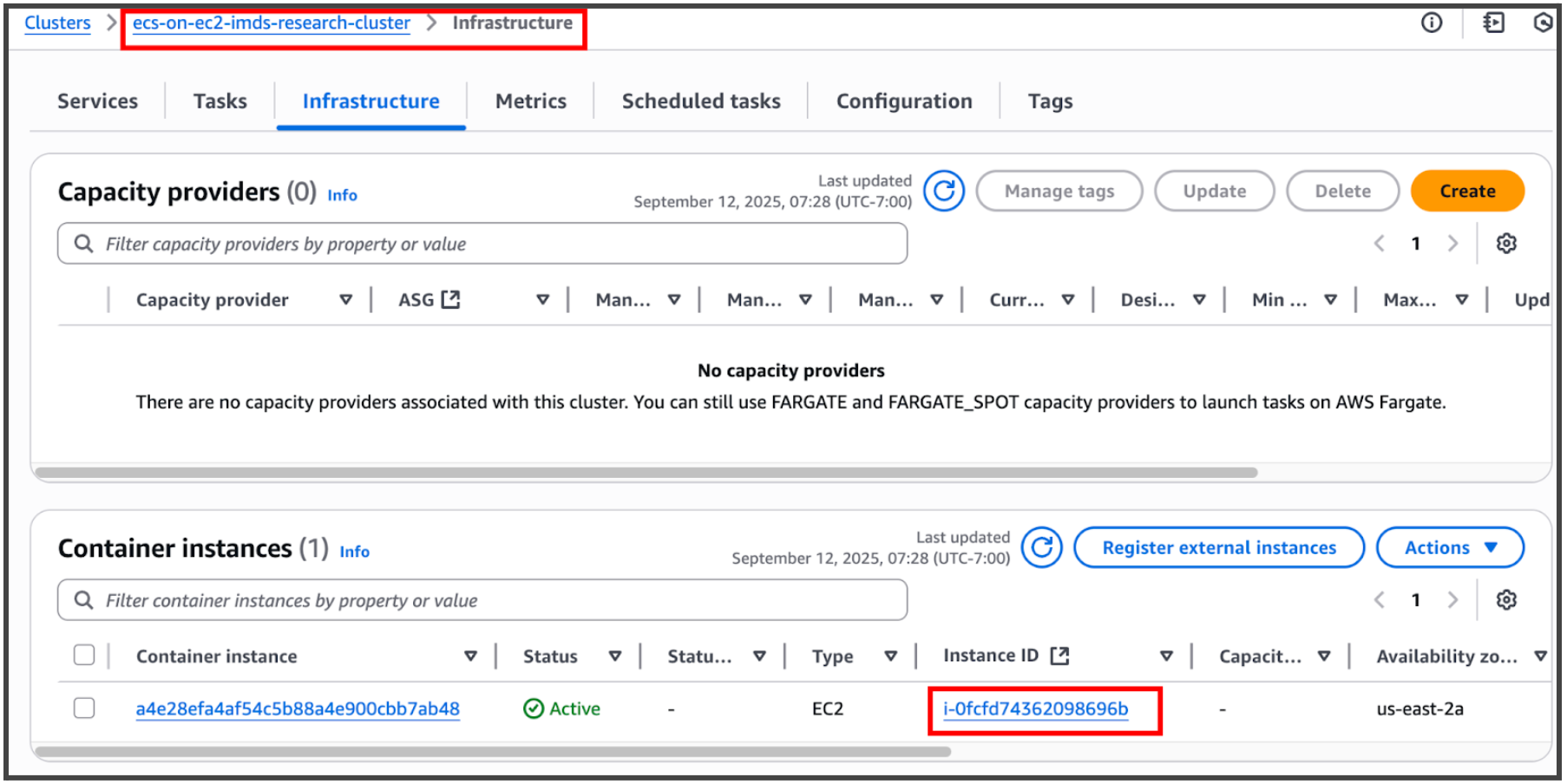

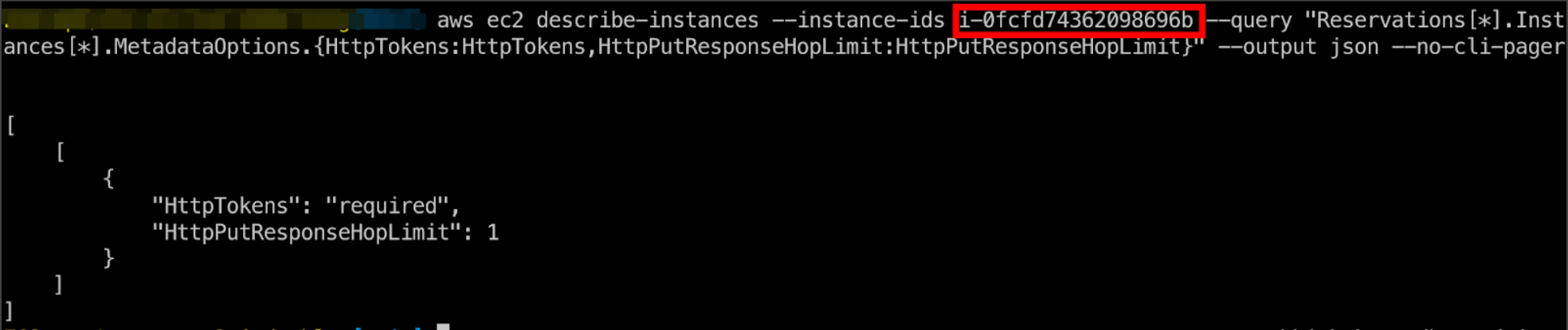

EC2 instance for ECS cluster with IMDSv2 and hop limit set to 1

    # Launching ECS task in awsvpc network mode
    aws ecs run-task --cluster ecs-on-ec2-imds-research-cluster \
      --task-definition arn:aws:ecs:us-east-2:08189XXXX:task-definition/ecs-on-ec2-imds-research-awsvpc-task-family:1 \
      --count 1 --launch-type EC2 \
      --network-configuration "awsvpcConfiguration={subnets=[subnet-09e81bdXXXXXX],securityGroups=[sg-09a2cbf7XXXXXX]}"

ECS task successfully fetching EC2 instance profile credentials when on awsvpc network mode (with IMDSv2 and the hop limit set to 1)
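To reproduce this check from inside a task (for example, in a shell opened with ECS Exec), the IMDSv2 flow can be scripted with curl: request a session token with a PUT, list the role name, then fetch that role's credentials. This is a minimal sketch, assuming curl is present in the container image. The function name and its optional base-URL argument are hypothetical; the argument exists only so the function can be exercised against another address. The function prints nothing and reports the result through its exit status, so credentials do not end up in task logs.

```shell
# Return 0 if EC2 instance profile credentials can be fetched from IMDS
# (IMDSv2 flow), non-zero otherwise. Prints nothing on success or failure.
# Hypothetical helper; the optional argument overrides the IMDS base URL.
imds_credentials_reachable() {
  local base="${1:-http://169.254.169.254}"
  local token role
  # Step 1: request a short-lived IMDSv2 session token (PUT request).
  # Per the table above, with a hop limit of 1 this never succeeds in bridge mode.
  token=$(curl -sf --max-time 2 -X PUT "$base/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 60") || return 1
  # Step 2: list the role attached through the instance profile.
  role=$(curl -sf --max-time 2 -H "X-aws-ec2-metadata-token: $token" \
    "$base/latest/meta-data/iam/security-credentials/") || return 1
  [ -n "$role" ] || return 1
  # Step 3: fetch the role's temporary credentials, discarding the body.
  curl -sf --max-time 2 -o /dev/null -H "X-aws-ec2-metadata-token: $token" \
    "$base/latest/meta-data/iam/security-credentials/$role"
}
```

Based on the table above, with IMDSv2 required and a hop limit of 1, one would expect imds_credentials_reachable && echo reachable to print reachable in awsvpc and host mode and to fail in bridge mode.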

So How Do You Actually Block IMDS Access?

Since IMDSv2 alone doesn’t fully mitigate the risk, here is how you can block ECS tasks, across various network modes, from accessing EC2’s IMDS:

- awsvpc: As per AWS docs, you can set the ECS_AWSVPC_BLOCK_IMDS variable to true in the ECS agent configuration file, /etc/ecs/ecs.config.
- bridge:
  - Approach 1: As shown above, requiring IMDSv2 (with the hop limit set to 1) effectively blocks IMDS access from tasks.
  - Approach 2: Alternatively, as per AWS docs, you can update the EC2 instance's iptables rules as follows:

        sudo yum install -y iptables-services; sudo iptables --insert DOCKER-USER 1 \
          --in-interface docker+ --destination 169.254.169.254/32 --jump DROP

- host:
  - For host mode, AWS suggests setting the ECS_ENABLE_TASK_IAM_ROLE_NETWORK_HOST variable to false in the ECS agent configuration file, /etc/ecs/ecs.config. While testing this, we found a caveat: AWS documentation also states that ECS_ENABLE_TASK_IAM_ROLE_NETWORK_HOST should be set to true if you want host-mode ECS tasks to use IAM task roles. This creates an impasse. If you set the parameter to true, the host-mode task can use its IAM task role but can also access EC2's IMDS. If you set it to false, access to the IMDS endpoint is restricted, but so is the task's ability to use its IAM task role.
  - Right now, there is no effective strategy for blocking IMDS access in host network mode while keeping IAM task roles functional. You could theoretically limit IMDS access at the process level via firewall rules and packet filtering, but that would have to happen at runtime.

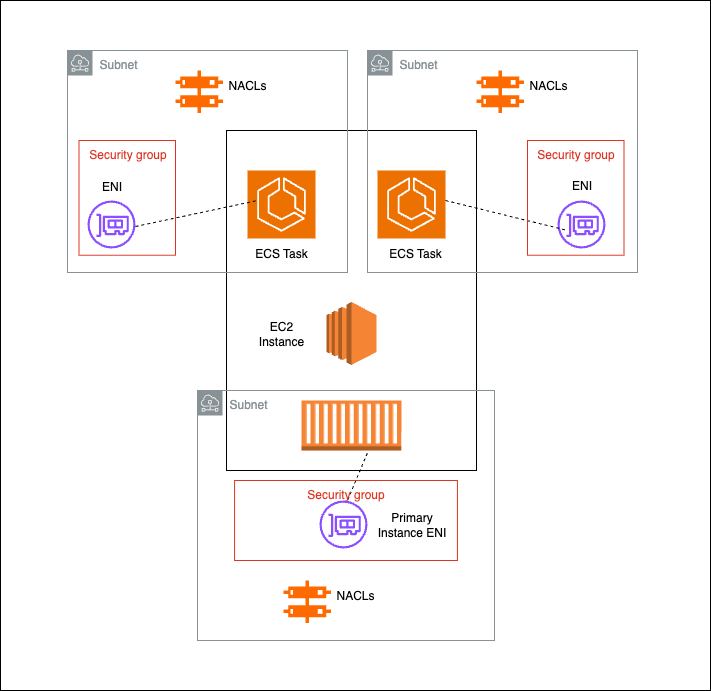
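The two ECS agent settings described above are plain KEY=VALUE lines in /etc/ecs/ecs.config. The following is a minimal sketch of setting them idempotently, for example from EC2 user data or a configuration-management step. set_ecs_config is a hypothetical helper, and the file path is a parameter only so the function can be tried against a scratch file. The agent reads this file when it starts, so a change takes effect on newly launched instances or after the agent is restarted (on the ECS-optimized Amazon Linux 2 AMI, for example, with sudo systemctl restart ecs).

```shell
# Idempotently set KEY=VALUE in the ECS agent configuration file.
# Hypothetical helper; the file argument defaults to /etc/ecs/ecs.config.
# Keys are assumed to be plain identifiers such as ECS_AWSVPC_BLOCK_IMDS.
set_ecs_config() {
  local key="$1" value="$2" file="${3:-/etc/ecs/ecs.config}"
  local tmp="${file}.tmp.$$"
  touch "$file" || return 1
  # Copy every line except an existing assignment of this key...
  grep -v "^${key}=" "$file" > "$tmp" || true
  # ...append the new assignment, then replace the original file.
  printf '%s=%s\n' "$key" "$value" >> "$tmp" || return 1
  mv "$tmp" "$file"
}

# Examples (run as root, e.g. from user data before the agent starts):
#   set_ecs_config ECS_AWSVPC_BLOCK_IMDS true                  # awsvpc tasks
#   set_ecs_config ECS_ENABLE_TASK_IAM_ROLE_NETWORK_HOST false  # host tasks; also disables IAM task roles in host mode
```

Rewriting the whole file, rather than appending blindly, keeps repeated runs from leaving conflicting duplicate assignments of the same key.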

Note on AWSVPC Mode

While awsvpc mode is the recommended mode, there are some specifics worth highlighting. For each ECS task launched in awsvpc network mode, a corresponding elastic network interface (ENI) is created and attached to the host EC2 instance.

Architecture diagram showing awsvpc ECS tasks on an EC2 instance, with each task having its own subnet, ENI, and associated components

However, EC2 instances have a limit on the number of ENIs that can be attached, determined by the instance type; for example, a t3.micro supports only 2 ENIs. This limits how many awsvpc tasks can run on a single EC2 instance. Since each EC2 instance is launched with its own primary ENI, an instance with a capacity of, say, 2 ENIs can run only 1 awsvpc task.
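The capacity arithmetic above is simply the instance's ENI limit minus the primary ENI. This is a minimal sketch, assuming ENI trunking (an ECS feature that raises ENI density on supported instance types) is not enabled. Both function names and the AWS_CLI override hook are hypothetical; the lookup uses the AWS CLI's ec2 describe-instance-types command.

```shell
# Upper bound on awsvpc tasks per instance, assuming ENI trunking is not
# enabled: the primary ENI belongs to the instance, each task needs its own.
max_awsvpc_tasks() {
  local max_enis="$1"
  if [ "$max_enis" -gt 1 ]; then
    echo $((max_enis - 1))
  else
    echo 0
  fi
}

# Look up an instance type's ENI limit (requires a configured aws CLI;
# AWS_CLI is an optional, hypothetical override).
max_enis_for_type() {
  "${AWS_CLI:-aws}" ec2 describe-instance-types \
    --instance-types "$1" \
    --query 'InstanceTypes[0].NetworkInfo.MaximumNetworkInterfaces' \
    --output text
}
```

Since a t3.micro allows 2 ENIs, max_awsvpc_tasks "$(max_enis_for_type t3.micro)" prints 1, matching the example above.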

ENIs give awsvpc ECS tasks flexibility and control over networking (for example, attaching security groups to ENIs). Since each ECS task has its own ENI, tasks can be “placed” in different subnets (as shown in the figure above), which allows customized traffic rules across tasks using network access control list (NACL) rules.

However, even with this level of control, it is not possible to block IMDS access for awsvpc tasks at the network level. AWS exposes the EC2 IMDS endpoint within customer VPCs on link-local addresses served by the underlying hypervisor. As such, NACLs and security groups cannot be used to block IMDS access.

Conclusion

While hardening IMDS configuration is a very important security consideration for AWS customers running ECS on EC2 workloads, the lack of clear guidance and insecure defaults (ECS tasks can access EC2 IMDS by default) increase the complexity of the process. In the longer term, using Fargate, with its stronger isolation boundaries, would help avoid the pitfalls of ECS on EC2 altogether.

The recently released Amazon ECS Managed Instances feature supports both awsvpc and host network modes. While AWS doesn't officially document this, our testing identified that for awsvpc mode, the ECS_AWSVPC_BLOCK_IMDS variable is set to true by default (via the Bottlerocket awsvpc-block-imds setting), which blocks access to IMDS.

Terraform code associated with this post can be found in this GitHub repository: https://github.com/latacora/ecs-on-ec2-gaps-in-imds-hardening. It can be used for running ECS workloads on EC2 instances in various IMDS configurations and different network modes to test the patches discussed above.